Source: VentureBeat

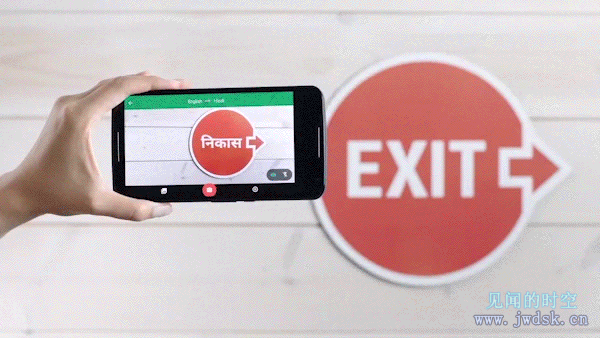

Google Translate now provides instant visual translations in 27 languages on iOS and Android

Google today announced that within the next few days its Google Translate app for iOS and Android will be able to give users immediate visual translations of text in 27 languages.

Instant visual translation is now available for Bulgarian, Catalan, Croatian, Czech, Danish, Dutch, English, Filipino, Finnish, French, German, Hindi, Hungarian, Indonesian, Italian, Lithuanian, Norwegian, Polish, Portuguese, Romanian, Russian, Slovak, Spanish, Swedish, Thai, Turkish, and Ukrainian, according to a blog post today from Google Translate product lead Barak Turovsky. Meanwhile, the app will soon be able to provide real-time translation of speech in 32 languages, Turovsky wrote.

The news comes six months after Google first introduced instant visual translation in Translate, and a little over a year after Google’s acquisition of Quest Visual.

People using the app can try out the support for the new languages by downloading a language pack for each language. From there, the app can work even when the mobile device it’s running on has no Internet connection.

Google Translate can do this by relying on an increasingly trendy type of artificial intelligence called deep learning.

Google has trained its artificial neural network — a key technology for deep learning — on images showing letters as well as on fake images marred by imperfections, to simulate real-life scenes. From there, the Google Translate app looks up the letters in order to make an inference, or educated guess, about the words that the mobile device’s camera was pointed at.
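
The idea of mixing clean letter images with deliberately degraded copies can be illustrated with a small sketch. This is not Google's pipeline: the 5x5 glyphs, the pixel-flip noise model, and the names `GLYPHS`, `degrade`, and `make_training_set` are all assumptions made up for illustration.

```python
import random

# Hypothetical 5x5 glyph bitmaps standing in for clean letter images.
GLYPHS = {
    "T": ["#####", "..#..", "..#..", "..#..", "..#.."],
    "L": ["#....", "#....", "#....", "#....", "#####"],
}


def to_pixels(rows):
    """Convert a glyph drawn with '#' and '.' into a grid of 0/1 ints."""
    return [[1 if ch == "#" else 0 for ch in row] for row in rows]


def degrade(pixels, flip_prob, rng):
    """Return a copy in which each pixel is flipped with probability flip_prob.

    Assumed noise model: random flips loosely imitate smudges and glare.
    """
    return [
        [1 - p if rng.random() < flip_prob else p for p in row]
        for row in pixels
    ]


def make_training_set(copies_per_letter, flip_prob, seed=0):
    """Build (image, label) pairs: one clean image plus noisy copies per letter."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    samples = []
    for label, rows in GLYPHS.items():
        clean = to_pixels(rows)
        samples.append((clean, label))
        for _ in range(copies_per_letter):
            samples.append((degrade(clean, flip_prob, rng), label))
    return samples
```

Calling `make_training_set(3, 0.1)` would yield one clean and three noisy images per letter; a real system would presumably use far richer distortions (blur, rotation, lighting) and feed the result to a neural network.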

Historically, this sort of complex processing would happen in a remote data center, scaled out onto several servers. But Google built a very small neural network and a carefully curated training data set. That way, the computing can happen on a mobile phone with limited processing power and little if any connection to the Internet. And that’s significant.

Google Research has more about the work in a new blog post.
